Startup Artemis Networks has a technology it believes can take wireless networks to the next level. And the company may soon have the opportunity to prove it. Dish Network is making possible the world’s first pCell wireless technology deployment.

Through its wholly owned subsidiary American H Block Wireless, Dish is planning to hand over some of its H Block mobile spectrum in San Francisco to Artemis for up to two years for a field test. The only hurdle is regulatory: Artemis needs the FCC’s approval before it can move forward with the test.

A new approach to wireless, pCell has the potential to be revolutionary. Indoor testing has already demonstrated that it can deliver full-speed mobile data to every mobile device at the same time, no matter how many users are sharing the same spectrum. The end result is greater capacity than conventional LTE. The most advanced conventional LTE networks average 1.7 bps/Hz in spectral efficiency. By contrast, pCell posts an average of 58 bps/Hz, roughly 34 times the spectral efficiency of conventional LTE.
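The spectral-efficiency comparison becomes more concrete with a little arithmetic: a link’s data rate is its spectral efficiency multiplied by its bandwidth. The sketch below is only an illustration. It assumes a hypothetical 20 MHz channel (the article does not say how much spectrum the test will use) and treats both quoted averages as aggregate figures for the whole channel.

```python
# Spectral-efficiency averages quoted in the article (bits per second per hertz).
LTE_BPS_PER_HZ = 1.7
PCELL_BPS_PER_HZ = 58.0

# Hypothetical channel width, chosen only for illustration.
CHANNEL_HZ = 20e6  # 20 MHz


def throughput_bps(efficiency_bps_per_hz: float, bandwidth_hz: float) -> float:
    """Aggregate data rate = spectral efficiency x bandwidth."""
    return efficiency_bps_per_hz * bandwidth_hz


ratio = PCELL_BPS_PER_HZ / LTE_BPS_PER_HZ
lte = throughput_bps(LTE_BPS_PER_HZ, CHANNEL_HZ)
pcell = throughput_bps(PCELL_BPS_PER_HZ, CHANNEL_HZ)

print(f"pCell / LTE efficiency ratio: {ratio:.1f}x")   # 34.1x
print(f"LTE in 20 MHz:   {lte / 1e6:.0f} Mbps")        # 34 Mbps
print(f"pCell in 20 MHz: {pcell / 1e6:.0f} Mbps")      # 1160 Mbps
```

Because the ratio depends only on the two averages, it stays at about 34 whatever channel width you plug in. Only the absolute rates scale with bandwidth.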

Will It Really Work?

“The Artemis I Hub enables partners to test pCell in indoor and venue scenarios using off-the-shelf LTE devices, such as iPhone 6/6 Plus, iPad Air 2 and Android devices,” said Steve Perlman, Artemis founder and CEO.

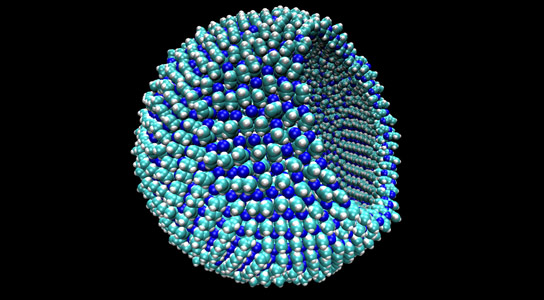

Here’s how it works: Instead of avoiding interference like conventional wireless technologies, pCell technology actually exploits interference. The technology combines interfering radio waves to create an unshared personal cell, or pCell, for each LTE device. This sets the stage to provide the full wireless capacity to each user at once, even at extremely high user density, according to the company.
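Artemis does not describe its algorithm here, so the sketch below is only a rough, hypothetical stand-in. It uses zero-forcing precoding, a textbook multi-user MIMO technique, to show how interfering signals can be combined on purpose. The transmitter knows the channel from every antenna to every device and solves for antenna signals whose sum at each device leaves only that device’s symbol. It assumes perfect channel knowledge, a made-up random channel, and as many antennas as devices. A real system gets none of these for free.

```python
import random


def solve(a, b):
    """Solve a @ x = b for a square complex matrix a (Gaussian elimination
    with partial pivoting). Works on copies, so the inputs are left untouched."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot to limit rounding error.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate this column from every row below the pivot.
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0j] * n
    for r in range(n - 1, -1, -1):
        acc = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = acc / m[r][r]
    return x


def matvec(h, x):
    """What each device hears: every antenna's signal, weighted by its channel."""
    return [sum(hij * xj for hij, xj in zip(row, x)) for row in h]


def random_channel(devices, antennas, rng):
    """Hypothetical Rayleigh-fading channel: row d holds the gains from each antenna to device d."""
    return [[complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(antennas)]
            for _ in range(devices)]


rng = random.Random(42)
N = 4  # hypothetical: 4 antennas serving 4 devices on the same frequency
H = random_channel(N, N, rng)
wanted = [1 + 0j, -1 + 0j, 1j, -1j]  # one symbol intended for each device

# Naive approach: antenna k simply transmits device k's symbol, so every
# device hears a mix of all four symbols (interference).
naive = matvec(H, wanted)

# Zero-forcing: solve H x = wanted for the antenna signals x, so the
# interfering waves add up to exactly the intended symbol at each device.
x = solve(H, wanted)
received = matvec(H, x)

naive_err = max(abs(r - s) for r, s in zip(naive, wanted))
zf_err = max(abs(r - s) for r, s in zip(received, wanted))
print(f"worst error without precoding: {naive_err:.3f}")
print(f"worst error with zero-forcing: {zf_err:.2e}")
```

In this toy model, all four devices share one frequency at once, yet each receives its own symbol. That is the intuition behind a “personal cell.” Artemis’s actual processing, channel estimation and antenna layout are not covered by this sketch.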

We asked Jeff Kagan, an independent technology analyst, for his take on pCell. He told us it’s an interesting idea, but added that we don’t yet know whether it will work in real-world operations.

“If it does work as advertised, it could alleviate some of the pressures on traditional networks like LTE in areas like stadiums where there are large groups in a small area. Of course this is not automatic,” he said.

Stretching the Limits

Indeed, customers still have to insert Artemis SIM cards into their LTE devices to use the service, unless they have devices that carry the new universal SIM. In that case, consumers could simply select Artemis as their LTE provider on their device’s screen. The devices would then connect to Artemis pCell service as they would to any LTE service. However, most consumers don’t have devices that carry the universal SIM.

“This is an idea that is needed as we stretch the limits of the way we currently provide wireless data,” Kagan said. “This also inserts another company into the mix, a company that will charge for its services. We really have more questions than answers today, but it’s an interesting new approach.”

Beyond the Dish news, Artemis is also rolling out the Artemis I Hub for venue and indoor trials. The Artemis I Hub provides pCell service through 32 distributed antennas and promises to deliver up to 1.5 Gbps in shared spectrum to off-the-shelf LTE devices, with frequency agility from 600 MHz to 6 GHz. That would enable pCell operation in any mobile band.
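To see how a 600 MHz to 6 GHz tuning range relates to the spectrum in this story, the sketch below checks a few allocations against it. These include the H Block pair (1915 to 1920 MHz and 1995 to 2000 MHz) that Dish would hand over. The band list is our own illustration, not one published by Artemis. The check is also a simplification: a radio’s tuning range alone does not guarantee it can operate in a band, because filters, power limits and licensing also matter.

```python
# Frequency-agility range quoted for the Artemis I Hub, in MHz.
HUB_MIN_MHZ = 600
HUB_MAX_MHZ = 6_000

# Illustrative mobile allocations in MHz (our selection, not Artemis's list).
BANDS = {
    "H Block, lower": (1915, 1920),
    "H Block, upper": (1995, 2000),
    "LTE Band 12 downlink": (729, 746),
    "LTE Band 7 downlink": (2620, 2690),
}


def in_hub_range(low_mhz: float, high_mhz: float) -> bool:
    """True if the whole allocation [low, high] lies inside the hub's tuning range."""
    return HUB_MIN_MHZ <= low_mhz and high_mhz <= HUB_MAX_MHZ


for name, (low, high) in BANDS.items():
    status = "inside" if in_hub_range(low, high) else "outside"
    print(f"{name}: {low} to {high} MHz is {status} the hub's range")
```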
